ModelOptimizer.ai™

For business users focused on generating acceptable ROI from their AI initiatives, ModelOptimizer.ai™ provides LLM usage monitoring, cost control, analysis and reporting by processing environment, by query, by microservice or application, by model and by account.

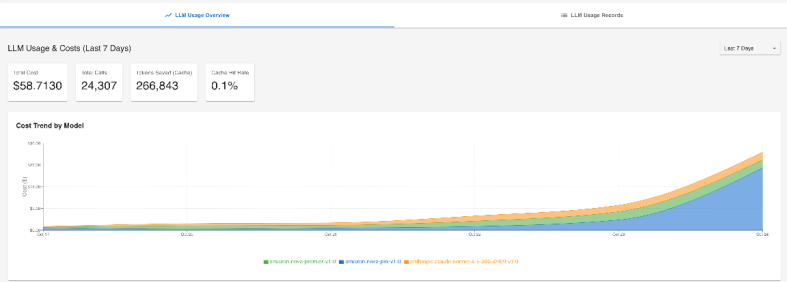

LLM Usage and Costs

LLM Usage Attribution from ModelOptimizer.ai™

Resolving the disconnect between LLM usage and cost detail requires precise visibility. This includes tying usage and related costs back to the business initiative(s) that triggered them.

Eventually, this expands to user-level tracking, allowing businesses to connect usage patterns and token costs directly to individual contributors, departments, or automated agents.

ModelOptimizer.ai™ provides full attribution of LLM activity by:

- Processing Environment (e.g., Dev, QA, Prod)

- Microservice or Application that initiated the call

- Model or Model Family used to process the request

- Account and Sub-Account involved in the interaction
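As an illustration of the attribution dimensions listed above, the following minimal sketch rolls up per-call usage records by any one dimension. It is not the product's implementation: the `UsageRecord` fields, the `summarize` helper, and the sample values (service names, model names, costs) are all hypothetical and chosen only to mirror the list.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class UsageRecord:
    """One LLM call, tagged with the attribution dimensions (hypothetical schema)."""
    environment: str   # e.g. "dev", "qa", "prod"
    service: str       # microservice or application that initiated the call
    model: str         # model or model family
    account: str
    sub_account: str
    input_tokens: int
    output_tokens: int
    cost_usd: float


def summarize(records: Iterable[UsageRecord], dimension: str) -> Dict[str, dict]:
    """Total calls, tokens, and cost for each value of one attribution dimension."""
    totals: Dict[str, dict] = defaultdict(
        lambda: {"calls": 0, "tokens": 0, "cost_usd": 0.0}
    )
    for record in records:
        bucket = totals[getattr(record, dimension)]
        bucket["calls"] += 1
        bucket["tokens"] += record.input_tokens + record.output_tokens
        bucket["cost_usd"] += record.cost_usd
    return dict(totals)


# Made-up sample data, for illustration only.
SAMPLE = [
    UsageRecord("prod", "checkout-api", "model-a", "acct-1", "team-x", 100, 50, 0.5),
    UsageRecord("prod", "search-api", "model-b", "acct-1", "team-y", 200, 100, 0.25),
    UsageRecord("dev", "checkout-api", "model-a", "acct-2", "team-z", 10, 5, 0.125),
]

if __name__ == "__main__":
    for dim in ("environment", "service", "model", "account"):
        print(dim, summarize(SAMPLE, dim))
```

The same roll-up works for any field on the record, which is why adding a new attribution dimension (for example, a user or agent ID) would only require a new field in this sketch.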

Integrating With Bedrock

The core LLM proxy technology of ModelOptimizer.ai™ integrates with Bedrock on both the prompt-detail (usage) side and the cost (billing) side. It handles request routing and error retries, and it caches responses in S3.

By centralizing all LLM interactions, we can uniformly manage configuration, enforce access controls, and easily introduce optimizations such as dynamic model selection or token-level cost reduction in the future. The metadata currently captured in the proxy layer is the account/sub-account ID and the calling service. The metadata layer is easily expanded.
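To make the proxy pattern concrete, here is a minimal sketch of a centralized wrapper that tags each call with account, sub-account, and calling-service metadata, retries on errors, and caches responses. It rests on several assumptions: the `LLMProxy` class and its parameters are hypothetical, the backend is an injected callable rather than a real Bedrock client, and an in-memory dictionary stands in for the S3 response cache.

```python
import hashlib
import json
import time
from typing import Callable, Dict, List


class LLMProxy:
    """Single entry point for LLM calls (illustrative only, not the product's code)."""

    def __init__(self, invoke: Callable[[str, str], str],
                 max_retries: int = 2, backoff_s: float = 0.5) -> None:
        self._invoke = invoke              # assumed backend: (model, prompt) -> response text
        self._max_retries = max_retries
        self._backoff_s = backoff_s
        self._cache: Dict[str, str] = {}   # stand-in for an S3-backed cache
        self.usage_log: List[dict] = []    # one metadata entry per call

    def call(self, prompt: str, *, model: str, account_id: str,
             sub_account_id: str, service: str) -> str:
        metadata = {"model": model, "account_id": account_id,
                    "sub_account_id": sub_account_id, "service": service}

        # Identical (model, prompt) pairs share one cache entry.
        key = hashlib.sha256(json.dumps([model, prompt]).encode("utf-8")).hexdigest()
        if key in self._cache:
            self.usage_log.append({**metadata, "cache_hit": True})
            return self._cache[key]

        last_error = None
        for attempt in range(self._max_retries + 1):
            try:
                response = self._invoke(model, prompt)
                break
            except Exception as exc:  # a real proxy would catch narrower error types
                last_error = exc
                if attempt < self._max_retries:
                    time.sleep(self._backoff_s * (2 ** attempt))  # exponential backoff
        else:
            raise RuntimeError("LLM call failed after retries") from last_error

        self._cache[key] = response
        self.usage_log.append({**metadata, "cache_hit": False})
        return response
```

Because every call passes through one method, extending the metadata (for example, with a project or user ID) would be a matter of adding a keyword argument and a key in the logged entry.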

ModelOptimizer.ai™ Output

The default instance of ModelOptimizer.ai™ provides a dashboard with both summary and filtered views of LLM usage by processing environment, by service, by model and by account.
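The difference between a summary view and a filtered view can be sketched in a few lines. This is only an illustration of the idea, assuming usage rows are plain dictionaries; the `filtered_view` and `summary_view` helpers, the field names, and the sample rows are hypothetical and do not describe the actual dashboard.

```python
from typing import Dict, Iterable, List


def filtered_view(rows: Iterable[dict], **filters: str) -> List[dict]:
    """Keep only the rows whose fields match every given filter value."""
    return [
        row for row in rows
        if all(row.get(field) == value for field, value in filters.items())
    ]


def summary_view(rows: Iterable[dict]) -> Dict[str, float]:
    """Collapse a set of rows into total calls, tokens, and cost."""
    rows = list(rows)
    return {
        "calls": len(rows),
        "tokens": sum(row["tokens"] for row in rows),
        "cost_usd": sum(row["cost_usd"] for row in rows),
    }


# Made-up rows, for illustration only.
ROWS = [
    {"environment": "prod", "service": "checkout-api", "model": "model-a",
     "account": "acct-1", "tokens": 150, "cost_usd": 0.5},
    {"environment": "prod", "service": "search-api", "model": "model-b",
     "account": "acct-1", "tokens": 300, "cost_usd": 0.25},
    {"environment": "dev", "service": "checkout-api", "model": "model-a",
     "account": "acct-2", "tokens": 15, "cost_usd": 0.125},
]

if __name__ == "__main__":
    print(summary_view(ROWS))                                     # all usage
    print(summary_view(filtered_view(ROWS, environment="prod")))  # production only
```

Filters compose, so a view such as "production usage of one model by one account" is the same call with more keyword arguments.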

Custom views will include analyses by user/agent for monitoring and resource management. Project, budget and/or initiative metadata could also be mapped to the proxy layer for measuring contribution to ROI and for training purposes.

AI’s Cost of Unmeasured Intelligence

AI adoption across enterprise environments is expanding, but even when the outcomes are positive, companies are unable to attribute usage and cost to specific users, projects, products, or business functions.

Most critically, businesses struggle to answer the core question: what is the return on this AI initiative?